Introduction#

Load data from  to

to  , the fastest way.

, the fastest way.

ConnectorX enables you to load data from databases into Python in the fastest and most memory efficient way. It is a Python package that provides a high-level interface to the popular database connectors. Here is our rust documentation: rust-docs.

What you need is one line of code:

import connectorx as cx

cx.read_sql("postgresql://username:password@server:port/database", "SELECT * FROM lineitem")

Optionally, you can accelerate the data loading using parallelism by specifying a partition column.

import connectorx as cx

cx.read_sql("postgresql://username:password@server:port/database", "SELECT * FROM lineitem", partition_on="l_orderkey", partition_num=10)

The function will partition the query by evenly splitting the specified column to the amount of partitions. ConnectorX will assign one thread for each partition to load and write data in parallel. Currently, we support partitioning on numerical columns (cannot contain NULL) for SPJA queries.

Experimental: We are now providing federated query support (PostgreSQL only and do not support partition for now), you can write a single query to join tables from two or more databases! (JRE >= 1.8 is required)

import connectorx as cx

db1 = "postgresql://username1:password1@server1:port1/database1"

db2 = "postgresql://username2:password2@server2:port2/database2"

cx.read_sql({"db1": db1, "db2": db2}, "SELECT * FROM db1.nation n, db2.region r where n.n_regionkey = r.r_regionkey")

Check out more detailed usage and examples here. A general introduction of the project can be found in this blog post.

Performance#

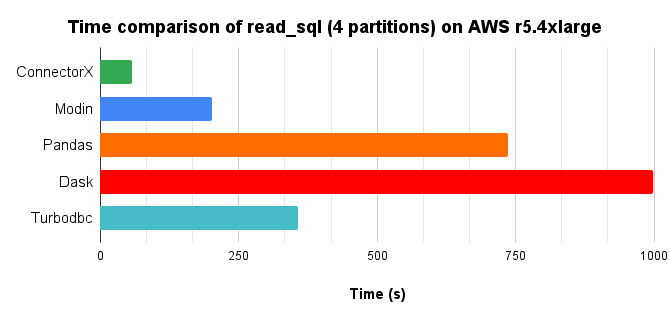

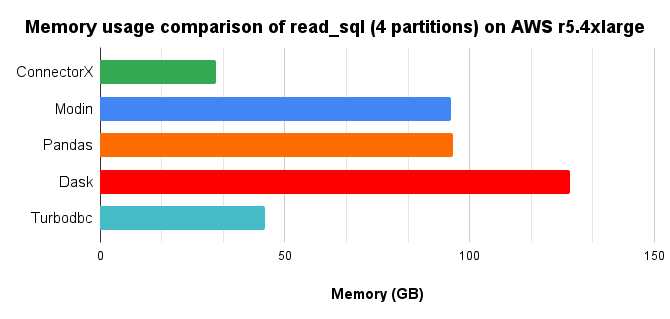

We compared different solutions in Python that provides the read_sql function, by loading a 10x TPC-H lineitem table (8.6GB) from Postgres into a DataFrame, with 4 cores parallelism.

Time chart, lower is better.#

Memory consumption chart, lower is better.#

In conclusion, ConnectorX uses up to 3x less memory and 21x less time (3x less memory and 13x less time compared with Pandas.). More benchmark result can be found under each database pages here.

How does ConnectorX achieve a lightening speed while keeping the memory footprint low?#

We observe that existing solutions more or less do data copy multiple times when downloading the data. Additionally, implementing a data intensive application in Python brings additional cost.

ConnectorX is written in Rust and follows “zero-copy” principle. This allows it to make full use of the CPU by becoming cache and branch predictor friendly. Moreover, the architecture of ConnectorX ensures the data will be copied exactly once, directly from the source to the destination.

How does ConnectorX download the data?#

Upon receiving the query, e.g. SELECT * FROM lineitem, ConnectorX will first issue a LIMIT 1 query SELECT * FROM lineitem LIMIT 1 to get the schema of the result set.

Then, if partition_on is specified, ConnectorX will issue SELECT MIN($partition_on), MAX($partition_on) FROM (SELECT * FROM lineitem) to know the range of the partition column.

After that, the original query is split into partitions based on the min/max information, e.g. SELECT * FROM (SELECT * FROM lineitem) WHERE $partition_on > 0 AND $partition_on < 10000.

ConnectorX will then run a count query to get the partition size (e.g. SELECT COUNT(*) FROM (SELECT * FROM lineitem) WHERE $partition_on > 0 AND $partition_on < 10000). If the partition

is not specified, the count query will be SELECT COUNT(*) FROM (SELECT * FROM lineitem).

Finally, ConnectorX will use the schema info as well as the count info to allocate memory and download data by executing the queries normally.

Once the downloading begins, there will be one thread for each partition so that the data are downloaded in parallel at the partition level. The thread will issue the query of the corresponding partition to the database and then write the returned data to the destination row-wise or column-wise (depends on the database) in a streaming fashion.

Supported Sources & Destinations#

Example connection string, supported protocols and data types for each data source can be found here.

For more planned data sources, please check out our discussion.

Sources#

Postgres

Mysql

Mariadb (through mysql protocol)

Sqlite

Redshift (through postgres protocol)

Clickhouse (through mysql protocol)

SQL Server

Azure SQL Database (through mssql protocol)

Oracle

Big Query

ODBC (WIP)

…

Destinations#

Pandas

PyArrow

Modin (through Pandas)

Dask (through Pandas)

Polars (through PyArrow)

Supports#

You are always welcomed to:

Ask questions in stackoverflow. Make sure to have #connectorx attached.

Ask questions & propose new ideas in our [forum][discussion_page].

Help us developing this project (adding databases and dataframes), please check out this guide.

Organizations and Projects using ConnectorX#

To add your project/organization here, reply our post here